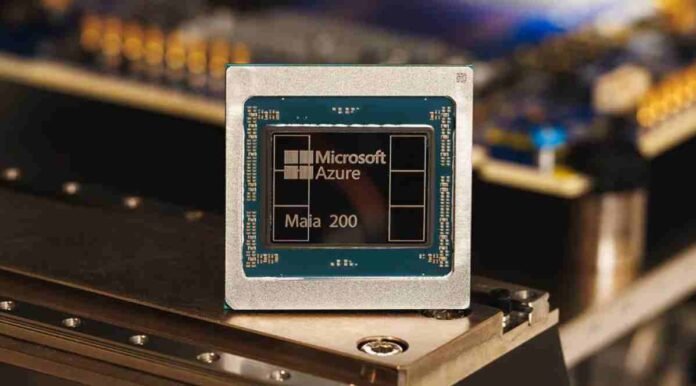

- Microsoft launched Maia 200, a new in-house AI accelerator designed for large-scale inference on Azure

- It features 216GB HBM3e memory and targets high efficiency with FP4 and FP8 performance

- Microsoft claims it beats key rivals from AWS and Google on narrow-precision benchmarks

- Maia 200 is already powering Copilot workloads and is rolling out to US datacenter regions first

Microsoft has pulled the curtain back on Maia 200, its latest in-house AI accelerator designed to power the next wave of large-scale inference and model serving. Positioned as the successor to Maia 100, the new chip is being pitched as a major leap forward for Azure, and a clear signal that Microsoft wants tighter control over the hardware running its most demanding AI workloads.

The company says Maia 200 is built to reshape the cost equation for running massive AI systems at scale. In simple terms, it aims to deliver more performance per watt, more throughput per chip, and better overall efficiency for the kinds of workloads that are now dominating cloud demand.

That matters not just for Microsoft’s own products, but for customers who are increasingly looking for alternatives to the crowded GPU market.

With Maia 200, Microsoft is also sharpening its competitive edge against rival cloud giants. Amazon Web Services continues to push its Trainium lineup, while Google is doubling down on its TPU ecosystem.

Maia 200 is Microsoft’s answer to that arms race, giving Azure another serious option for AI teams looking to deploy models faster and more economically.

What makes Maia 200 different

Microsoft is going big on specs, and for once, the numbers do most of the talking. Maia 200 reportedly packs more than 100 billion transistors and is manufactured on TSMC’s 3nm process. It also includes native FP8 and FP4 tensor cores, reflecting a growing industry shift toward narrow-precision compute, especially for inference, where efficiency is king.

One of the standout upgrades is memory. Maia 200 comes with 216GB of HBM3e memory delivering up to 7TB per second of bandwidth. That is a crucial detail because memory bandwidth is often the bottleneck for modern AI inference, especially when running large language models that must stream massive weight matrices out of memory for every token they generate.
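To see why bandwidth matters so much, here is a rough back-of-the-envelope sketch in Python. It assumes single-stream decoding in which every weight is read from HBM once per generated token, and it ignores the KV cache, on-chip SRAM reuse, batching, and compute time. The 7TB per second figure is the one quoted above; the 70 billion parameter model and the function name are hypothetical, chosen only for illustration.

```python
# Rough upper bound on decode speed when inference is limited by memory bandwidth.
# Assumption: each generated token requires reading all weights from HBM once.

HBM_BANDWIDTH_BYTES_PER_S = 7e12  # "up to 7TB per second", as quoted in the article


def max_decode_tokens_per_s(num_params, bits_per_param,
                            bandwidth=HBM_BANDWIDTH_BYTES_PER_S):
    """Bandwidth-bound ceiling on tokens per second for one chip."""
    weight_bytes = num_params * bits_per_param / 8
    return bandwidth / weight_bytes


# Hypothetical 70-billion-parameter model, not a figure from Microsoft.
params = 70e9
for label, bits in [("FP8", 8), ("FP4", 4)]:
    rate = max_decode_tokens_per_s(params, bits)
    print(f"{label}: at most about {rate:.0f} tokens/s per stream")
```

Under these simplified assumptions, halving the bits per weight doubles the ceiling, which is one reason narrow formats such as FP4 are attractive for inference. Real deployments batch requests and reuse data on chip, so actual throughput will differ.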

On-chip SRAM also gets a notable mention, with 272MB available. Combined with a redesigned memory system and specialized high-bandwidth data movement features, Maia 200 is built to keep more of the model close to the compute. The practical result is that fewer chips may be needed to run a model efficiently, which can reduce cost and complexity across the board.
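The "fewer chips" point can be illustrated with simple capacity arithmetic. The sketch below only counts the memory needed for model weights against the 216GB of HBM3e quoted earlier. It assumes decimal gigabytes and that all of the capacity is usable for weights, which overstates what is available once the KV cache, activations, and runtime overhead are included. The 400 billion parameter model is hypothetical.

```python
import math

# Minimum number of chips needed just to hold a model's weights in HBM.
# Assumption: 216GB means 216e9 bytes, all of it available for weights.

HBM_CAPACITY_BYTES = 216e9  # "216GB of HBM3e", as quoted in the article


def min_chips_for_weights(num_params, bits_per_param,
                          capacity=HBM_CAPACITY_BYTES):
    """Smallest chip count whose combined HBM can store the weights."""
    weight_bytes = num_params * bits_per_param / 8
    return math.ceil(weight_bytes / capacity)


# Hypothetical 400-billion-parameter model, not a figure from Microsoft.
params = 400e9
for label, bits in [("FP16", 16), ("FP8", 8), ("FP4", 4)]:
    print(f"{label}: at least {min_chips_for_weights(params, bits)} chip(s)")
```

In this simplified example the same model needs at least four chips at FP16, two at FP8, and one at FP4, which shows how narrow precision and large per-chip memory work together to shrink a deployment.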

Microsoft claims the chip can deliver more than 10 PFLOPS in FP4 and roughly 5 PFLOPS in FP8. That level of throughput is aimed squarely at the largest AI models in production today, with enough headroom for the even heavier systems that are already on the horizon.
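One way to relate these throughput claims to the memory figures is a roofline-style estimate: dividing peak compute by memory bandwidth gives the number of operations a workload must perform per byte fetched from HBM before the chip becomes compute-bound rather than bandwidth-bound. The sketch below uses the article's rounded figures (10 PFLOPS FP4, 5 PFLOPS FP8, 7TB per second), which are vendor claims rather than measured results, and the function name is hypothetical.

```python
# Roofline "ridge point": FLOPs per byte of HBM traffic needed to saturate compute.
# Inputs are the rounded vendor claims quoted in the article, not measurements.

HBM_BANDWIDTH_BYTES_PER_S = 7e12  # up to 7TB per second
PEAK_FLOPS = {
    "FP4": 10e15,  # "more than 10 PFLOPS"
    "FP8": 5e15,   # "roughly 5 PFLOPS"
}


def ridge_point(peak_flops, bandwidth=HBM_BANDWIDTH_BYTES_PER_S):
    """Arithmetic intensity (FLOPs/byte) above which compute is the limit."""
    return peak_flops / bandwidth


for precision, flops in PEAK_FLOPS.items():
    print(f"{precision}: about {ridge_point(flops):.0f} FLOPs per byte")
```

Workloads below that intensity, such as low-batch token generation, are limited by memory bandwidth instead of the headline PFLOPS number, which is why the memory system gets so much attention in the design.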

Performance claims and cloud competition

Microsoft is not being subtle about who Maia 200 is targeting. The company says Maia 200 offers three times the FP4 performance of third-generation Amazon Trainium hardware. It also claims FP8 performance that exceeds Google’s seventh-generation TPU.

Those comparisons are clearly designed to position Maia 200 as a serious contender in the custom silicon race. It is not just about raw speed, either. Microsoft is framing Maia 200 as its most efficient inference system yet, which is arguably the more important selling point in a world where power availability is becoming a limiting factor for AI growth.

The architecture is also tuned for the realities of modern AI workloads. Microsoft points to a memory subsystem centered on narrow-precision datatypes, a specialized DMA engine, on-die SRAM, and a dedicated network-on-chip fabric built for high-bandwidth data movement. The goal is straightforward: reduce the amount of time the chip spends waiting on data, and keep execution pipelines full for longer.

If those efficiency gains translate cleanly into real-world deployments, Maia 200 could become a major lever for Azure customers looking to scale inference without watching their cloud bills explode.

Rolling out now with Copilot in mind

Microsoft is already using Maia 200 internally to support AI workloads in Microsoft Foundry and Microsoft 365 Copilot. That is an important point, because it suggests the chip is not just a lab experiment or a future roadmap slide. It is being deployed into production environments where performance and reliability are non-negotiable.

Wider customer availability is expected soon, and Microsoft has started rolling Maia 200 into its US Central datacenter region. The company also plans to expand deployments to its US West 3 region near Phoenix, Arizona, with more regions set to follow.

For developers and researchers who want to get hands-on early, Microsoft is offering a preview of the Maia 200 software development kit. The company is inviting academics, developers, frontier AI labs, and open-source model contributors to apply.

That move mirrors what competitors have done in the past, building early momentum by letting the AI community experiment and optimize workloads before broad commercial rollout.

Maia 200 is not just another chip announcement. It is part of Microsoft’s larger plan to make Azure a more compelling home for large-scale AI, especially as businesses demand faster inference, lower costs, and more predictable access to compute.
