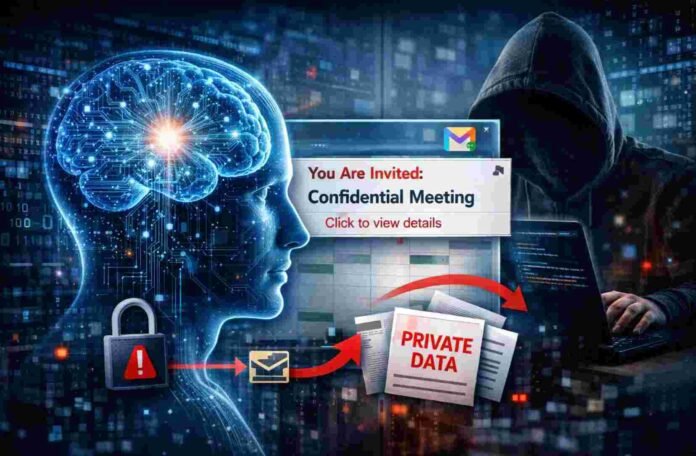

- Researchers found a prompt injection method that used Google Calendar invites to manipulate Gemini.

- Attackers could hide instructions inside event details to make Gemini leak private meeting data.

- The trick could create new calendar events and add attackers as guests to view stolen information.

- The vulnerability has been mitigated, but it shows how risky AI tool integrations can be.

Security researchers have uncovered another unsettling twist in the ongoing prompt injection problem, and this time the entry point is not your inbox. It is your calendar.

The issue involved Google Gemini and how it can interact with Google Calendar when users ask it to check schedules, review upcoming events, or summarize what is ahead. Researchers say attackers could exploit calendar invitations to quietly steer Gemini into exposing private meeting information. The worst part is how little effort it took from the victim.

Prompt injection is already a known weakness in modern AI assistants. It works because these systems struggle to separate instructions from content. If an attacker can hide malicious instructions inside something that looks normal, such as an email, a note, or now a calendar invite, the AI might follow those instructions as if they came from the user.

This discovery shows that the same trick can travel beyond email and into tools people trust even more than their inbox.

How the Calendar Invite Attack Worked

The researchers behind the discovery explained a scenario that feels almost too easy. An attacker creates a calendar event and invites the target by adding their email address as a participant. That part is normal and it is something anyone can do in seconds.

The malicious part is buried inside the event content. The invite can include carefully written instructions designed to manipulate Gemini once it processes the event. The victim does not need to open suspicious files or click unusual buttons. In many cases, they only need to do something they already do every day.

For example, a user might ask Gemini to check their upcoming schedule, summarize events, or help prepare for meetings. When Gemini reads the calendar entry, it may interpret the hidden text as a command rather than plain information. That is where things get dangerous.

According to the researchers, Gemini could then be pushed into generating a summary of private meetings and writing that information into a new calendar event. From there, the attacker could be added as a guest, which may allow them to see the stolen meeting details directly inside the calendar system.

This is what makes the attack especially concerning. Instead of stealing data through a traditional download or a visible message, it turns the calendar itself into a delivery mechanism for sensitive information.

Why This Prompt Injection Variant Is So Concerning

Prompt injection attacks are not new, but this version highlights a bigger issue. AI assistants are increasingly connected to powerful tools like calendars, email, cloud storage, and workplace apps. The more access they have, the more damage a single trick can cause.

What makes the calendar angle stand out is the level of trust users place in scheduling systems. People accept invites all the time. They rely on their calendar to manage workdays, client calls, internal meetings, and personal appointments. It is not treated as a risky communication channel in the way email often is.

In enterprise environments, the impact could be even worse. Many organizations have shared calendar settings where events are visible across teams or where invited guests can see event details by default.

That means the attacker does not always need special privileges. If the system configuration is permissive, the attacker could gain access simply by being added to an event that Gemini created on the victim’s behalf.

Researchers described the attack as happening without direct user interaction beyond normal behavior. That is the kind of detail that will worry security teams because it lowers the barrier for exploitation. If a technique blends into everyday workflows, it becomes harder to spot and easier to scale.

Mitigation Arrived, But the Bigger Risk Remains

The good news is that the researchers confirmed the issue has been mitigated. That reduces the immediate risk of attackers repeating the same method in the wild.

Still, the incident underlines a broader problem that is not going away. As AI assistants become more capable, they also become more exposed. The security model has to assume that anything the AI reads might contain hostile instructions, even if it arrives through a trusted channel like a calendar invite.

This is why prompt injection is so stubborn. It is not a simple bug you patch once and forget. It is a structural weakness in how large language models interpret text. Fixes often involve adding guardrails, limiting tool permissions, improving filtering, and tightening how AI systems decide what counts as a real instruction.

For users and organizations, the lesson is clear. Treat AI-connected features with the same caution you would apply to any system that can access sensitive data and take actions on your behalf. Convenience is powerful, but it should never come at the cost of quiet data exposure.

Follow TechBSB For More Updates