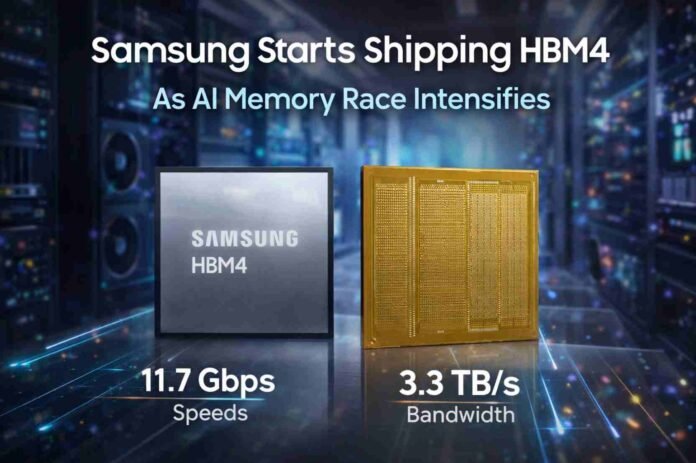

- Samsung has begun commercial shipments of HBM4, claiming an industry first.

- HBM4 delivers per-pin speeds of 11.7Gbps and up to 3.3TB per second of bandwidth per stack.

- Capacity reaches 36GB now, with 48GB versions planned.

- Samsung targets stronger AI and data center demand through 2026 and beyond.

Samsung says it has begun commercial shipments of its next-generation high-bandwidth memory, HBM4, claiming an industry first as competition in AI memory accelerates.

The company confirms that mass production is already underway, with initial units delivered to customers building advanced AI accelerators and data center GPUs.

HBM4 arrives at a moment when AI model sizes, training clusters, and inference workloads are stretching the limits of existing memory technologies. Bandwidth, density, and power efficiency are now critical differentiators for chipmakers and hyperscalers alike.

Samsung’s latest move signals its intent to secure an early foothold in what is fast becoming the most strategic battleground in semiconductor hardware.

Built on advanced nodes for higher yields and headroom

Unlike incremental updates that rely heavily on mature processes, Samsung says HBM4 is built on its sixth-generation 10nm-class DRAM technology combined with a 4nm logic base die.

According to the company, this pairing helped it achieve stable yields as production ramped, without requiring a fundamental redesign mid-cycle.

That is a bold technical claim. In practice, real-world validation will come as large deployments begin and performance metrics emerge from independent testing.

Still, the use of advanced nodes suggests Samsung is pushing beyond conservative scaling strategies in favor of performance headroom.

Sang Joon Hwang, Executive Vice President and Head of Memory Development at Samsung Electronics, said the company chose not to follow the conventional route of extending proven designs.

Instead, it adopted what he described as its most advanced process technologies to unlock future scalability and customer flexibility. The emphasis appears to be on long-term competitiveness rather than a short-term spec bump.

Higher speeds, wider bandwidth, and more capacity

On paper, HBM4 delivers a significant jump over previous generations. Samsung states that the new memory reaches transfer speeds of 11.7Gbps, with potential headroom up to 13Gbps depending on configuration.

For context, the industry baseline sits at around 8Gbps, while HBM3E typically operates near 9.6Gbps. That puts 11.7Gbps roughly 46 percent and 22 percent ahead of those figures, respectively.

Bandwidth per stack climbs to as much as 3.3TB per second. That is roughly 2.7 times the throughput of an HBM3E stack, a leap that could translate directly into faster model training and higher inference throughput for large-scale AI deployments.
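The bandwidth figures can be sanity-checked with simple arithmetic: peak per-stack bandwidth is the per-pin data rate multiplied by the number of data pins. The sketch below assumes the 1,024-pin and 2,048-pin interface widths this article cites for the previous generation and HBM4, and uses decimal units (1TB = 1,000GB). The function name is hypothetical and chosen only for illustration.

```python
def stack_bandwidth_tb_s(pin_speed_gbps: float, pin_count: int) -> float:
    """Peak per-stack bandwidth in TB/s, using decimal units."""
    # Gbit/s per pin * pins = Gbit/s; divide by 8 for GB/s, by 1000 for TB/s.
    return pin_speed_gbps * pin_count / 8 / 1000


# Pin speeds and pin counts are the figures quoted in this article.
hbm3e = stack_bandwidth_tb_s(9.6, 1024)
hbm4_rated = stack_bandwidth_tb_s(11.7, 2048)
hbm4_max = stack_bandwidth_tb_s(13.0, 2048)

print(f"HBM3E at 9.6 Gbps x 1024 pins: {hbm3e:.2f} TB/s")
print(f"HBM4 at 11.7 Gbps x 2048 pins: {hbm4_rated:.2f} TB/s")
print(f"HBM4 at 13.0 Gbps x 2048 pins: {hbm4_max:.2f} TB/s")
print(f"HBM4 (13 Gbps) vs HBM3E:       {hbm4_max / hbm3e:.1f}x")
```

Under these assumptions, 11.7Gbps works out to about 3.0TB per second, while the 3.3TB per second headline figure and the 2.7x ratio line up with the 13Gbps ceiling rather than the 11.7Gbps rating.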

Capacity also sees a notable increase. Current 12-layer stacks range from 24GB to 36GB. Samsung plans to introduce 16-layer variants, which could push capacity to 48GB per stack.
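The capacity figures are consistent with a straightforward layers-times-die-density calculation. The article does not state die densities, so the 16Gb and 24Gb values below are assumptions inferred by dividing the quoted stack capacities by the layer counts. The function name is hypothetical.

```python
def stack_capacity_gb(layers: int, die_gbit: int) -> float:
    """Stack capacity in GB for a given layer count and per-die density in Gbit."""
    # 8 Gbit = 1 GB, so each die contributes die_gbit / 8 GB.
    return layers * die_gbit / 8


# (layers, assumed die density in Gbit); densities are inferred, not from Samsung.
configs = [(12, 16), (12, 24), (16, 24)]

for layers, die_gbit in configs:
    capacity = stack_capacity_gb(layers, die_gbit)
    print(f"{layers}-layer stack of {die_gbit}Gb dies: {capacity:.0f} GB")
```

On that reading, the move from 36GB to 48GB would come from adding four layers of the same assumed 24Gb die, not from a denser die.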

For AI accelerators where memory constraints often limit model size or batch processing, that density boost could reduce the need for complex multi-chip scaling.

However, performance gains in HBM do not come without trade-offs. Pin counts have doubled from 1,024 to 2,048 in this generation.

More pins typically mean greater complexity in power delivery and signal integrity. Samsung says it has addressed this through low-voltage through-silicon via (TSV) technology and refinements in power distribution.

The company claims an improvement in power efficiency of roughly 40 percent compared with HBM3E. Thermal management has also been reworked, with structural changes designed to enhance heat dissipation and maintain reliability under sustained high bandwidth workloads.

Scaling production and looking ahead to HBM4E

Beyond the technical specifications, Samsung is leaning heavily on its manufacturing scale and integrated packaging capabilities. The company highlights coordination between its foundry and memory divisions, as well as collaboration with GPU makers and hyperscalers developing custom AI hardware.

This vertical integration could prove decisive as demand for HBM accelerates through 2026 and beyond. Supply constraints have defined previous HBM cycles, and any vendor able to guarantee volume while maintaining yields stands to capture outsized market share.

Samsung has already outlined its next steps. HBM4E sampling is planned for later in the year, with custom HBM variants expected to follow in 2027. That roadmap suggests the company is preparing for increasingly specialized memory configurations tailored to specific AI accelerators.

Whether rivals respond with faster timelines or alternative architectures will determine how long Samsung’s claimed first-mover advantage holds.

For now, the start of commercial HBM4 shipments marks a significant milestone in the AI hardware race, one that underscores how central memory has become to the future of high-performance computing.
