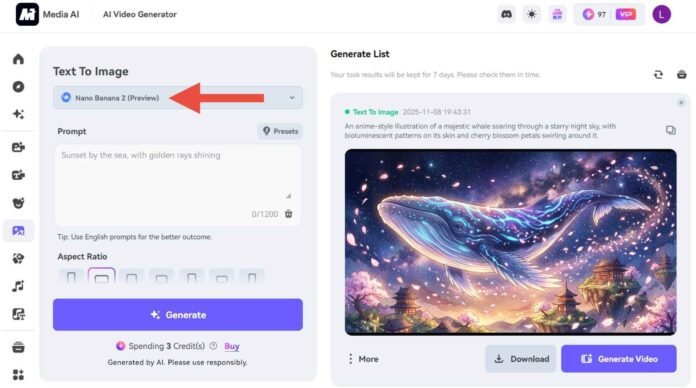

- Google’s new Nano Banana 2 model enhances AI image generation with self-correcting intelligence.

- The model can fix text and design details without altering the rest of the image.

- Nano Banana 2 adopts a multi-step workflow that mimics human creative thinking.

- Early previews show more realistic and refined images across Google’s Gemini ecosystem.

Google is taking another big leap in the world of AI-generated images with its new model called Nano Banana 2. The model, which recently surfaced in preview form, is expected to arrive as part of the Gemini app.

Early previews suggest that Google’s latest image generator is about to set a new benchmark for creativity, control, and realism.

A New Way to Think and Create

Nano Banana 2 is not just an update to its predecessor. It marks a deeper shift in how Google wants AI to create art. Instead of simply generating an image from a prompt, the model now plans its output step by step.

It first visualizes the concept, then refines it through self-correction before showing the final version.

This change might sound small, but it represents a major philosophical shift. Google is teaching its AI to act like a designer who drafts, reviews, and fixes their own work before presenting it. The result is a smarter model that catches and corrects its own mistakes before the user ever sees them.

Better Control and More Realistic Details

Early testers who have accessed the preview say Nano Banana 2 feels more stable and natural. It offers better control over angles, viewpoints, and lighting. Colors appear sharper and more balanced. Users can even fix text errors in an image without changing everything else around it.

The first Nano Banana model gained attention for creating lifelike, action-figure-style portraits. Nano Banana 2 seems to continue that trend with greater accuracy. The characters in shared preview images look more consistent and expressive, holding their shape even when the perspective changes.

One of the most talked-about improvements is the way the model handles fine details. Where older AI models struggled with hands, reflections, and proportions, Nano Banana 2 appears to manage them with surprising accuracy. That fits Google's apparent goal of making this tool feel like a genuine creative assistant rather than just an image generator.

Self-Correction and Human-Like Thinking

The standout feature of Nano Banana 2 is its self-correcting workflow. The model now reviews its own output, identifies flaws, and automatically refines them. This loop continues until the AI decides the image is ready to share.

This built-in quality check is a major upgrade for Google’s AI ecosystem. It mirrors how human artists work: they sketch, analyze, and revise. Now, Google’s model seems to be doing the same.

Behind the playful name, the technology is serious. Google is giving its AI tools the ability to understand scenes more deeply. That includes awareness of objects, backgrounds, and even the relationships between them. It could be the start of a new generation of AI design tools that understand context, not just composition.

Expanding Beyond Gemini

While the Nano Banana 2 preview is currently linked to Google’s Gemini app, traces of it have been found in other experimental products. Testers have spotted signs of the model in Whisk Labs, a creative testing platform under Google’s umbrella.

This multi-surface rollout strategy echoes what Google did with the original Nano Banana model. At first, users discovered it quietly improving image quality across several apps. If history repeats itself, Nano Banana 2 may soon appear in everyday Google tools before it officially launches.

Leaked code references have also revealed something called Nano Banana Pro, which could be a more advanced version for high-end or high-resolution work. That hints at a possible premium tier of Google’s AI image technology for professional creators.

The Rise of GEMPIX 2

Internally, Nano Banana 2 is also known as GEMPIX 2, connecting it to the Gemini family of creative models. This name suggests deeper integration with Google’s broader AI ecosystem. If Nano Banana 2 becomes the creative engine behind Gemini’s image tools, it might soon rival the best generators in the market.

The preview images shared online show smoother lines, balanced lighting, and more accurate anatomy than before. AI-generated people, places, and objects look less artificial and more like polished photos. The overall impression is that Google’s new model understands what makes an image look “real”, and how to reproduce that feeling consistently.

With this development, Google is not only refining how AI makes images but redefining how it thinks about creativity itself. The model’s ability to plan, correct, and deliver final visuals with less user frustration is a sign that AI artistry is entering a new stage of maturity.

Follow TechBSB For More Updates